When Machines Change Art

At a few times in history, new technologies came along that changed the way we make art. Machines, chemicals, and/or algorithms replaced some of the steps that artists did, changing how we made art and, sometimes, radically transforming what we think art is.

Now seems like one of those times.

When change happens, it’s surprising, and it’s tempting to see it in extremes: the new technology is either going to improve everything or ruin everything. But the reality of how past technologies changed art is nuanced and complex, with different effects for different communities in the short-range and then the long-range.

In this post, I’m specifically focused on technologies that automate (or seem to automate) steps of the artistic process. What do past examples of such technologies changing art have in common, and do they have lessons for the current moment?

My main thesis is:

| New art-making technologies change art in consistent ways, and studying the past helps us understand how things will change in the future. |

This blog post is an attempt to identify these trends in past examples, and to judge if and how they’re relevant for “AI” art. By understanding and recognizing patterns in new art-making technologies, we can understand and respond to them better. Maybe the future won’t repeat the past, but this history can help avoid the superficial takes that treat this as something completely unprecedented and new. Changes like this have happened before.

As always, opinions on this blog post are mine alone, and not those of any institution I’m affiliated with.

Effects of technological change are complicated

A major theme of my writing related to technology and art, and to the possible impacts of “AI” on art, is that these phenomena have complex effects. I’m fond of Melvin Kranzberg’s writings on the complexity of technological change; his first law says “Technology is neither good nor bad; nor is it neutral,” and he described examples of how industrialization and pesticides had been beneficial in some situations and harmful in others. I came across his writing through danah boyd’s thesis on the new trend of teenagers’ use of social media, and their parents’ fear of it, later published as It’s Complicated.

But, like most discourses around technology, the discourse around “AI” and art tends to vastly oversimplify it into one of two extremes: “AI will democratize art,” or “AI will kill art and will decimate jobs for real artists.” These extremes are both absurd and counterproductive to real understanding; they will lead to bad policy and/or preparing people poorly for the future.

In boyd’s words: “Both extremes depend on a form of magical thinking scholars call technological determinism. Utopian and dystopian views assume that technologies possess intrinsic powers that affect all people in all situations the same way. Utopian rhetoric assumes that when a particular technology is broadly adopted it will transform society in magnificent ways, while dystopian visions focus on all of the terrible things that will happen because of the widespread adoption of a particular technology that ruins everything. These extreme rhetorics are equally unhelpful in understanding what actually happens when new technologies are broadly adopted. Reality is nuanced and messy, full of pros and cons.”

(My essay was most immediately inspired by reading a paper by Amy Orben, and seeing the value in finding the themes and trends in past technological developments.)

Background

Before getting to the main topics, I want to reiterate some views on “AI”: it is a set of technological developments, nothing more, nothing less. So it is useful to understand it in the context of other past technological developments. Feel free to skip ahead to the next section if this is all familiar to you.

Computers are not artists

This post is a follow-up to my 2018 article “Can Computers Create Art?” and talk, in which I argue that computers will (almost certainly) never be artists and they will never make artists obsolete. People have been making art with computers for nearly 60 years, some by writing code that makes pictures, and others by painting and animating with digital tools, and I described how each of these examples is authored by artists, and controlled by artists.

Nothing fundamental has changed with the latest developments: the latest algorithms do not “automate creativity” or make artists obsolete—they are computer software tools used by humans to make art. If these ideas are unfamiliar you may wish to read my previous paper or watch the talk, especially if the past year’s diffusion models are your first exposure to generative computer art.

I put “AI” in quotes because these things are not intelligent, they’re just a class of computer software and algorithms.

Identifying the real risks

In the past year, many working artists have publicly shared intense fear and anger over new “AI” technologies. They’ve spoken about their fear of the threats “AI” poses to them: threats to their livelihoods and professional identities (through associating their names with prompts), and the reuse of their personal styles. They’ve also spoken about their anger at models being trained on their artwork without consent, and at the perception that “AI” researchers are trying to automate creativity to make human artists obsolete. This is mainly relevant to certain classes of professionals like concept artists and illustrators.

These threats to livelihoods are real, the fear is valid and legitimate, and the potential harms need to be addressed.

Addressing threats requires identifying them correctly. One of the goals of this post is to look at similar historical examples to help understand the threats. Focusing on the wrong problem leads to bad solutions and fruitless arguments.

Arguments that the latest “AI” models will “kill art” or “make artists obsolete” or “rob artists of creative control” will grow more and more unconvincing as these creative tools mature and more people use them; these same claims were never true when people said the exact same things about previous technologies. The abstract notion of a threat to “art itself” doesn’t lead to solutions, just fruitless arguments about the definition of art.

The ethical discussion should focus on threats to current working artists, such as threats to employment, and the others listed above. I believe that studying history can provide examples of how some of these harms play out and how to respond to them, though I don’t attempt to say anything about solutions here.

Conversely, the potential impact on people’s lives shouldn’t be dismissed just by pointing to the long march of progress.

What is “AI” art?

In this article, I’m primarily focused on recent neural “AI” art, which I divide into two waves (so far). The First Neural “AI” Art Wave lasted 2015–2021 and ranged from DeepDream to GANs and early text-to-image; “AI” artists did their own coding, built on open-source tools, and, in some cases, built their own datasets. In the nascent Second Wave (2022–), artists are experimenting with general-purpose text-to-image interfaces or chatbots without doing their own coding or building their own datasets; these models often rely on proprietary code and/or datasets, although there are still some people building their own systems to make art.

“AI” art isn’t just one thing: these tools offer both faster ways to make what we can already make, and new types of media. Even so, changing the way you make pictures changes the pictures you’ll make. Much of the discussion this year is about text-to-image, but I believe this is a temporary stage; these things are going to continue evolving very quickly.

| Although most of the energy these days focuses on text-to-image, “AI” art is much more than just those techniques, and they will continue to evolve and change in the future. This post is not specifically about text-to-image. |

I would define “AI art” as any art primarily made with the tools that we happen to be calling “artificial intelligence” at the time. “AI” art has been around since the 1960s, e.g., Harold Cohen and Karl Sims. One could also include art about “AI”—whether or not it uses “AI”—like “Signs of the Times,” “ImageNetRoulette,” and “White Collar”. But this post is focused on the neural generative stuff.

These definitions will become increasingly blurry, as “AI” tools become more and more integrated into everyone’s workflows, the way there’s already some “AI” in everyone’s smartphone cameras. Many of the techniques used in digital imaging today might have been called “AI” a few decades ago.

This is a work-in-progress

Academically, this post is a collision of everything from computer science and art history to media studies to disruptive innovation to labor economics, and no one of these disciplines seems sufficient to cover the topic. I have a lot to learn about this topic; there’s already more stuff on my reading list.

Here’s an earlier, shorter presentation I gave on this (starts at 20:33 in the video):

1. It begins with experimentation

We have so many artistic technologies that we take for granted, but each of them had to be invented, developed, and figured out, and this took years, often decades.

New artistic tools are created by a small group of experimenters. These experimenters are often tinkerers and hobbyists; there’s a special kind of artist that prefers to work in these borderland spaces, to mix their own chemicals or write their own code. Their early artworks are experiments in both science and technology, seeing both what’s technically possible and what’s artistically intriguing.

Photography was developed by isolated tinkerers like Talbot and Daguerre. Nowadays we might think of photography as a thing that was just invented by someone long ago, but it really arose from a fertile and complex set of many experiments and attempts to figure out how to build the technology, and many of those attempts are forgotten.

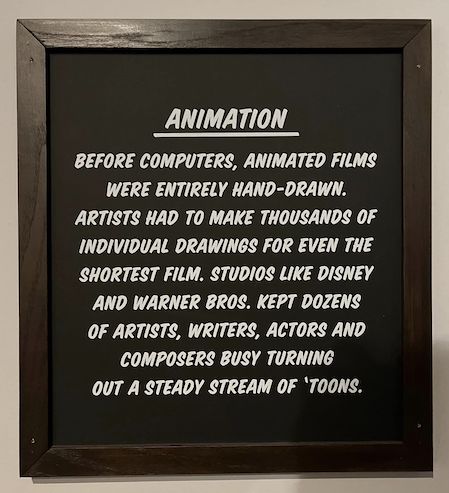

Animation began with experiments like Winsor McCay’s Gertie The Dinosaur, and the inventive explorations of the Fleischer brothers and Walt Disney. Disney developed the art and science of animation in a focused decade-long drive, culminating in the movie Snow White in 1937. The first fine-artists who used computers in the 1960s, people like Frieder Nake, were playing around with very primitive computing tools, before computer monitors or “computer code” even existed.

Advances in existing media can “reset” things to an experimental stage

In 1929, the critic Rudolf Arnheim wrote about the trouble people were having in figuring out how to use sound in movies, and the trouble audiences had in adapting to it. Arnheim later wrote that the invention of sound film temporarily made movies worse because of all this experimentation.

The early days of computer animation likewise involved numerous experiments building on traditional animation. Notably, “Toy Story” in 1995 culminated more than a decade of experimentation at Pixar and ILM, similar to how “Snow White” in 1937 culminated a decade of experimentation and development at Disney.

These early explorations are largely forgotten

We mostly seem to think of our existing art forms as things that have always existed, fully formed, in their present form.

Before I started studying these things, I could not name a single significant photographer from the first 100 years of photography.

I doubt most people could name a single film from the first 30 years of movies: for most people, movies began with the famous pieces, such as “Citizen Kane,” “The Wizard of Oz,” or something even later. How many computer-based artworks could most people name before video games or “Toy Story,” or, do most people know about the long history of musique concrète prior to The Beatles and Grandmaster Flash?

This makes it too easy to diminish new artistic experimentations, to say that a new experiment isn’t as good as the best masterpieces of established media.

For now, “AI” Art is largely about novelty

During the First Neural “AI” Art Wave, “AI” art developed in a rapid cycle of new artists experimenting with new technologies as they emerge. Most “AI” artists needed some technical skill to find, download, run, and modify the latest experimental machine learning code shared by research labs. The best “AI” art came from artists building their own training datasets (e.g., Helena Sarin, Scott Eaton, Sofia Crespo) and/or directly modifying their models. Even “AI” artworks with a message were still very experimental.

With the new easy-to-use text-to-image models, we’re now in a new wave of people exploring these models and what they can do, and it is still a period of heavy experimentation. These things are exciting because they are novel. I spent a fascinating month in May 2022 playing with DALL-E (until I lost interest in playing with it).

When people (like art critic Ben Davis) accuse “AI” art of being focused on novelty, I say: of course it is. The dominant aesthetic of any new art-making technology is novelty. The only way you figure out art in a new medium is by experimentation. And, for many of us, it’s exciting to be there at the beginning, to know that something big is happening, even if you don’t know what.

2. New media first mimics existing media, then evolves

The artists who first experiment with a medium often begin by mimicking existing media. It takes a long time, sometimes decades, to figure out the unique properties of the new medium.

Photography

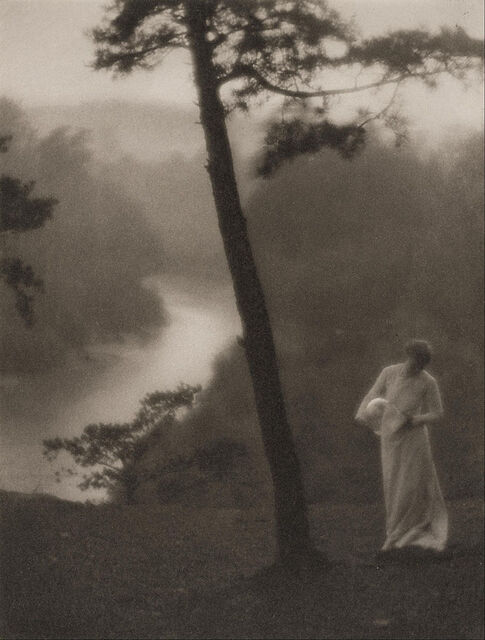

As a way of arguing for the artistic merits of photography, early Pictorialist photographers mimicked traditional painting:

As photography matured, some painters decided that this kind of realism wasn’t actually art, and created more hazy, impressionistic images, like Whistler’s Nocturne, which the Pictorialists then mimicked:

Two developments allowed photography to move beyond mimicry in the early 20th Century. First, the growth of Modern Art shifted the aesthetic cutting edge; “art” was no longer about illusionistic painting. Second, photography had been recognized as an art form by major institutions. Photographers like Group f/64 began to explore new directions, considering the unique properties of photography as a medium, rather than trying to repeat past styles.

Cinema

In 1895, the Lumière brothers made some of the first moving pictures:

It doesn’t seem like much now, but, at the time, it must have been so amazing just to see the pictures move at all.

Within a decade, people were telling stories with film. The early movies were much like filmed stage-plays, with a static camera, and actors making big gestures like Vaudevillians. It took several decades for artists to develop the language of film, including editing and camera techniques. Here’s a clip of media scholar Janet Murray summarizing this history:

(sorry for the looping)

Cinema was invented in the 1890s, but the first movies we now think of as masterpieces arrived decades later, like Modern Times (1936), Snow White (1937), and Citizen Kane (1941). (If you are a film buff, you can probably think of great films that came out earlier, like Buster Keaton’s “The General” from 1927 or “Nosferatu”.)

Hip-Hop

Or, consider the way hip-hop began as house party music, repurposing funk records with turntables and faders. The earliest rappers were inspired by comedians and poets. Then the DJs started scratching, and turntablism and remixing and sampling culture went far, far from that original party music, into all sorts of far-out directions like jungle music.

Computer art

When people started making art with computers, they consciously mimicked abstract artworks, as in A. Michael Noll’s 1964 Computer Composition with Lines (based on a Mondrian painting) and Frieder Nake’s 1965 Hommage à Paul Klee, inspired by a Klee painting.

Does “AI Art” start out by mimicking?

Imitation runs throughout the history of “AI” art. Most commonly, style transfer explicitly mimics existing artists, with lots of “Van Gogh” and “Picasso” styles, and newer diffusion models see a lot of “in the style of” prompts. I would argue that, in attempting to mimic existing styles, they are still producing new styles; a picture “in the style of a Van Gogh” isn’t actually in the style of Van Gogh, for many reasons. More generally, aesthetically, much of this second wave of “AI” art is about mimicking existing forms, whether traditional portraits or movie posters and so on, but much of it also looks very new.

History teaches that this simulation is temporary: people aren’t going to want “paintings in the style of Van Gogh” for very long (if they ever do). “AI” art may evolve into something very different.

One could also make the case that the popularity of visual indeterminacy in GAN Art refers to indeterminate visuals in Modern art, although without any specific stylistic reference.

3. The Artistic Backlash (“This will kill art / This is Not Art”)

When a new machine for art emerges, an artistic backlash responds, claiming that the new machine produces inherently inferior art. Versions of this claim are that this new thing will “kill art,” that this is “not art,” that this will lead to dumbed-down and “soulless” art that will crowd out the good stuff, and so on. (I’ll discuss other aspects of backlash later.)

In the 16th century, Michelangelo felt that the new oil paint technology was for amateurs, because you can “correct” things with oil, unlike with fresco.

In the earliest demonstrations of photography (the Daguerreotype) in 1838, painters like Paul Delaroche and J. M. W. Turner reacted that “This is the end of art.” Twenty years later, in a review of a photography salon, the poet Charles Baudelaire wrote that “If photography is allowed to supplement art in some of its functions, it will soon supplant or corrupt it altogether, thanks to the stupidity of the multitude which is its natural ally.”

In the early days of sound movies, people like Charlie Chaplin fought against them; Adolph Zukor, the president of Paramount, said “The so-called sound film shall never displace silent film. I believe as before that our future lies with silent film!” (For a superbly entertaining, but not very educational, take on the transition to talkies, watch the great “Singin’ in the Rain”.)

In 1982, the UK Musicians Union banned electronic drum machines and synthesizers as a threat to more-traditional musicians.

Impossible or inappropriate standards

Critics often hold these experimental new forms to impossible standards. I often read things like “AI art is no good because it doesn’t give me the same experience as a Rembrandt does”, or whatever once provided the author with a profound formative experience.

These responses often seem like status quo bias, judging a new artform as inferior because it doesn’t reach the highest standards of the current form, rather than trying to gauge its future potential as a new, different artistic medium.

In respected novelist and activist Wendell Berry’s 1987 essay on why he will never buy a computer, he wrote—with obvious skepticism—that he will only respect computers as a writing tool when someone uses a computer to produce a work of art comparable to Dante. In 2010, film critic Roger Ebert wrote that video games could never be art, because no existing game was worthy of comparison to the great historical masterpieces.

It sounds a lot like “disruptive innovation”, where established players dismiss new technology because it doesn’t perform as well as the old thing according to the old thing’s standards. But, because it’s cheaper and easier than the old thing, it serves more customers and eventually surpasses the old thing. It turns out that the old standards were just heuristics.

Mediocre forms fade away

This article so far could be accused of selection bias: I’m mostly focusing on inventions that ended up being wildly successful and influential. What about ones that didn’t? These, I argue, generally faded away on their own.

Not all technology has been great for art, but when a technology is gimmicky and/or artistically-limited—like Smell-o-Vision or autotune—it doesn’t last.

3D cinema is a more complex case. I’ve seen a few movies where the 3D cinematography felt like it added something to the storytelling and the experience. But, so far, it doesn’t seem like the benefit is enough to justify the cost and the drawbacks. Despite a considerable push from studios and theatres, audiences have not shown up for 3D cinema enough to keep it alive.

At this early stage in “AI” Art, the weirdness is the point

Some of the complaints about “AI” art are that it looks “off,” lacking the personal touch of hand-drawn art. It doesn’t look like what we’re used to; it doesn’t work by the traditional standards of experts. It looks weird.

The weirdness is what makes it exciting; it’s a sign that there’s something new going on here, and we don’t yet quite know what it is. It’s a sign that “AI” art isn’t quite the same artform or tool as before, and that it functions subtly differently. This kind of weirdness only appeals to certain kinds of people, as exemplified by Janelle Shane’s blog “AI weirdness”.

Does technology make inferior art?

I cannot think of a single example of a new artistic tool or medium that “kills” art by making “bad” art. Many times over the past two centuries people have claimed that some new medium like photography is going to kill art. They had good reasons at the time, but they were never right.

I don’t take these claims about “AI” art seriously, because this kind of claim has never ever been true in the past, and I haven’t seen any serious attempt to argue why this time is somehow different. By “serious,” I mean grounded both in how these tools actually work and in how art techniques evolve historically, rather than just status quo bias. These claims are often based on science fiction notions of how “AI” works, or that the “AI” will be the artists, which I likewise find implausible and ahistorical. At worst, it’ll be a fad, but never the death of art.

For example, people have long argued that television made inferior art, that television programming was “a vast wasteland” and that it dumbed down discourse. But there have been great television shows: great artistic achievements that couldn’t have happened in the same way in any other medium. (I recommend Emily Nussbaum’s defense of TV as an art form.) Perhaps television has been harmful to society in some ways (e.g., a certain Reality TV president), but it is not artistically inferior to theatre, cinema, or literature, and great TV is truly great.

Can you think of a new artistic tool or medium that, decades later, we unambiguously regret inventing as an artistic tool? I cannot.

4. Shifting employment (“This will kill jobs for artists”)

What is the effect of new artistic technology on artistic jobs? Despite the various simplistic narratives, it’s a really complex question, because new technologies can change the nature of artistic work (causing conflicting notions of “what an artist is”), and the short-range effects can look very different from the long-range effects. Moreover, while this post is primarily about artists’ tools (like photography), the topic overlaps considerably with new distribution mechanisms (like sound recording and online streaming), which have different-but-related effects.

The effect of technology on jobs in general is a huge topic with a long history. We get the term “Luddite” from textile workers who sabotaged new machines that replaced their jobs. (We also get the word sabotage from a similar source.)

Photography

In the 19th Century, photography replaced the need for portrait painters and the inferior silhouette techniques. But the painters didn’t all go out of work; many converted their portrait studios into photography studios. Photography also replaced practical drafting skills for architecture and record-keeping, and displaced the veduta painters who sold postcards to travelers in Italy.

We have fewer painters today, but many, many more people making pictures in one way or another, and many more people making pictorial art.

If, in 1840, you asked “will this hurt jobs for artists?”: the answer would be “yes,” because “artists” were, solely, people who painted physical pictures with real paint (or sculpted, or wrote, and so on). But, according to the much broader class of things we call “art” today, the answer would be No.

Cinema

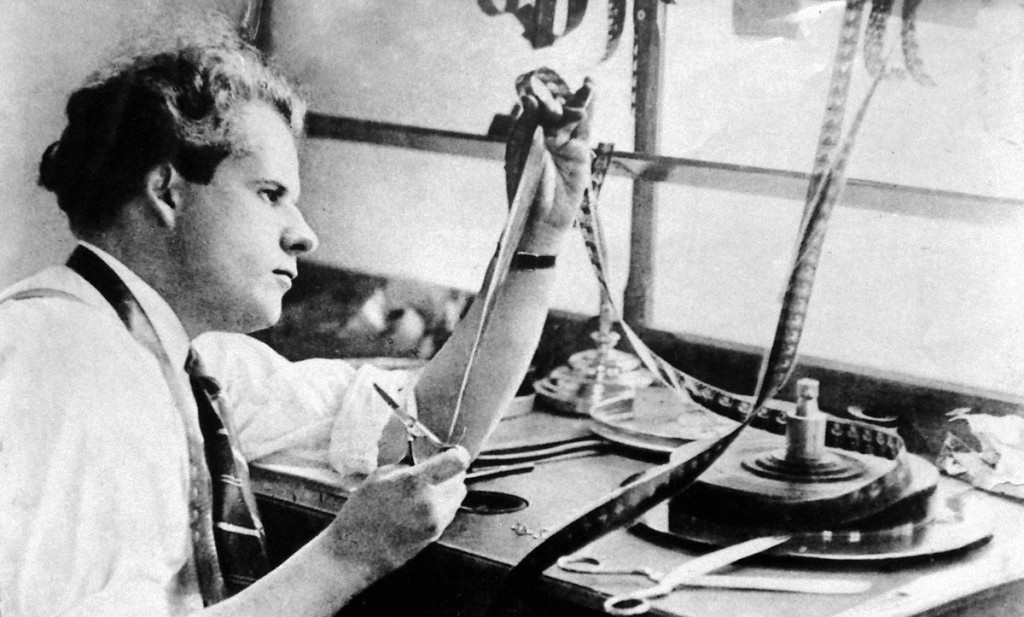

Cinema, in its early days, required big bulky cameras and lights, and managing physical celluloid film.

Film was developed on celluloid strips, and editing movies required physically cutting strips of film with scissors and taping them together; just managing all these strips of film was a lot of work. By the 80s, an indie filmmaker could do their own cinematography; digital video editing replaced physical strips of film with files on a computer. The physical labor required for cinematography and film editing continually decreased, but the artistic decisions remained.

Nowadays, many of the videos we watch are made by a single person filming and editing on their phone and sharing on social media. Filmmaking has gone from a massive, multiperson effort to something you can do alone in your living room. More people are making videos, and sharing them on social media, than ever before.

Yet, big budget filmmaking and TV are still massive enterprises, huge labor-intensive productions engaging large numbers of skilled crew and technicians. As the technology has gotten better, the scale and breadth of filmmaking has expanded dramatically.

Nowadays, this can all be done on a smartphone. But big-budget productions with large crews still make movies and, now, many expensive TV shows as well.

Recorded music

When music recording was invented, musicians were worried it would take their jobs: why go listen to live music when you can buy a record and listen to it at home?

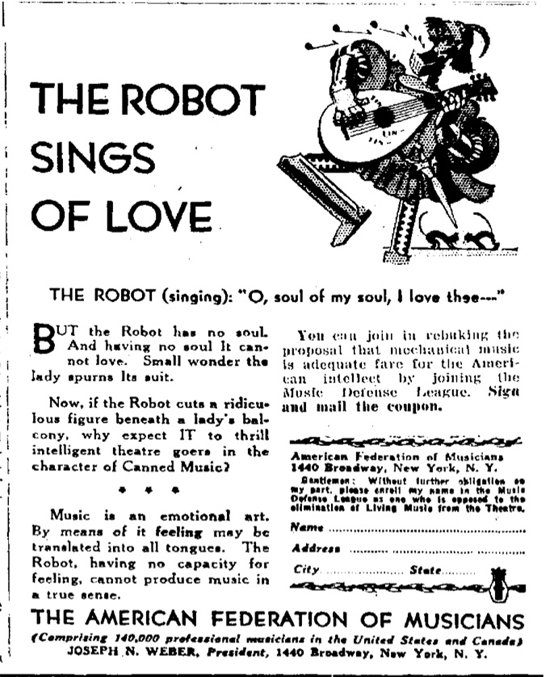

In the 1920s, the American Federation of Musicians (AFM) union decried music recording and forbade orchestras from recording albums, a move that led to the growth of recorded folk music.

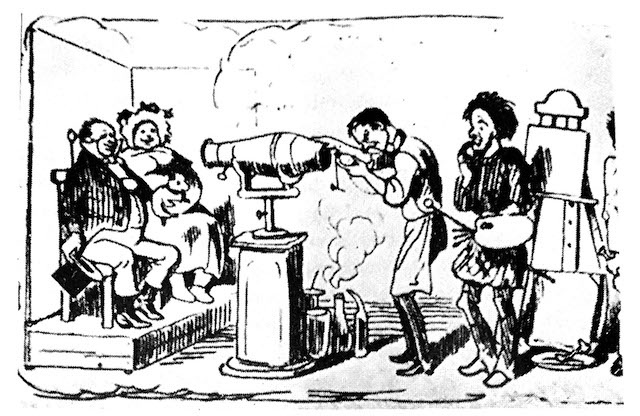

In 1927, the first movie with an audio soundtrack came out, and, within a few years, 20,000 live musicians lost their jobs performing in movie theatres. In response, the AFM launched a campaign of newspaper advertisements against movie soundtracks, including some illustrations that could still be used today:

Even if one part of the profession was decimated (performing music in movie theatres), other roles for musicians have expanded greatly. Moreover, the use of movie soundtracks truly enlarged and invigorated the cinema artform: music specifically composed and recorded for the movie itself changed filmmaking, to say nothing of dialogue, sound effects, and so on.

Did recorded music cause the total number of musicians making a living from music to go up or down (relative to the overall decline of the middle class)? I don’t know if numbers are available for this.

Lil Nas X is one of my favorite examples of how new technologies create new musical opportunities. At one point he was just some unemployed guy in his bedroom, finding beats online and rapping over them. He found a beat by some Dutch guy making beats in his attic (built by sampling a Nine Inch Nails song) and licensed it for $30; Lil Nas X shared his song “Old Town Road” online, and it became huge… the megahit of 2019. This is not a path to success that many people can follow (online distribution platforms may lead to rich-get-richer effects), but online platforms can potentially allow many people to reach niche audiences as well.

Computer animation

In the 1980s, Disney animators were scared of computers, fearing that computers would replace them entirely. But then Pixar built an extraordinarily successful business of making computer-animated movies, directed, written, and animated by a large studio of enormously talented artists. The computer didn’t do the animation; it was a new animation tool.

By the early 2000s, Disney interpreted the struggles of their traditional cel animation business, together with Pixar’s success, as signalling the death of traditional cel animation. They shut down cel animation in Burbank, and all traditional animators had to learn computer animation; the ones that couldn’t were laid off. Once Disney bought Pixar, Pixar’s leadership took over Disney animation and resurrected Disney cel animation, because they loved the traditional art form and Disney’s history. Yet, their first traditional animation wasn’t considered successful enough; audiences viewed it as old-fashioned. So Disney gave up on cel animation for good. Styles and tastes change, and technology is one of the driving factors.

Note that the skills involved in computer animation are quite similar to those in hand-drawn animation. The input devices are different, but both are essentially skills of performing through pictures. I remember hearing at one time that Pixar would hire people who could animate well by hand rather than people trained in specific animation software.

Hand-drawn animation styles aren’t actually dead; they’re alive and well outside the US—though using computers heavily in the process. Sony’s fantastic Spider-Verse movies are an encouraging sign for stylistic innovation in American animation. (And the singular Cuphead brilliantly merges hand-animation with video games, plus an absolutely banging jazz soundtrack.)

Visual effects (VFX)

A similar transition occurred in VFX: when ILM transitioned from model-making and stop-motion animation around the time of “Jurassic Park,” many of the traditional modelmakers resented and feared the digital painters and computer animation staff. Some animators made the transition, and some didn’t.

But now, there are opportunities for many more animators than before, because 3D computer animation makes smaller projects much more feasible, and because computer animation is used throughout “live action” films. For example, “Avatar” is basically a computer-animated movie with occasional actors and sets. VFX industry jobs are a mess now, but this is more about the business than the technology; Walt Disney had an animators’ strike in 1941. Computer animation is widespread in video games, both little indie games and big AAA games; it’s common throughout television.

Distribution mechanisms

In this post I am mainly talking about artistic tools and media, but it’s also important to look at the adjacent topic of distribution media: the way new technologies change when, where, and how we buy and appreciate art also transforms art. A lot of the sins that people assign to new technologies are really about the new business shifts that they enable.

The role of new distribution platforms, like recorded music, is a big topic with a long and complex history. The technologies that enable mass distribution also tend to enable consolidation of power unless antitrust mechanisms are enforced.

I recommend the book Chokepoint Capitalism on this topic. Here’s the chapter on the effects of online music streaming on artists.

It’s also worth noting that new distribution technology can enable new styles of art. The transition from live performance to recorded music—and then to music sold on Long Playing (LP) records—changed music, leading to things like album-oriented rock and TikTok remixes that are only possible with recorded media. Pink Floyd and Radiohead and Old Town Road couldn’t exist without recording technology.

The Short-Range versus the Long-Range

These debates over technology’s effects tend to mix up two different things: what happens to workers right now, and what happens over decades. It’s important to distinguish the short-range and long-range impacts, which can look quite different, and to consider both.

Short-range. I’ve heard a lot of past stories of traditional artists—like illustrators and designers and the Disney animators mentioned above—who were left behind by the transition to digital tools a few decades ago.

In the past year, many working artists have shared their genuine anxiety and fear over what these “AI” technologies mean for their livelihoods and identities. The fear is real, and, as illustrated above, the concerns are not without precedent.

What should be done to protect today’s artists? I do not know, but I believe it is fundamentally a labor issue, not an art issue. Unfortunately, we here in the US do not have much of a social safety net, so, if you lose your employment for whatever reason, even just bad luck, you can really be out in the cold. How do we as a society protect artists and build better buffers against change? New restrictions on how training data is used could slow down progress.

Borrowing experience from past labor movements (particularly creative workers’ unions) seems relevant here. For example, in the 1930s, some musicians’ unions secured a fund for performance musicians put out of work by sound recording technology. I don’t have much expertise or advice here to offer. Again, I suggest the book Chokepoint Capitalism as offering some useful suggestions, and this more recent blog post that directly addresses generative “AI”.

Some working artists will be able to keep doing what they’re doing. Others will recognize ways that new tools enhance their processes. The future of art is not people typing words into a text-box, but artists integrating these tools into modified (or entirely new) workflows. People with artistic skill and experience will be best positioned to use these new tools as they evolve, provided they take the time to learn.

This won’t work for everyone, sadly.

(Are there examples where development of a promising new technology was deliberately halted? They’re naturally harder to find, which could lead to some survivorship bias. The only examples I can think of are electric cars and biological things like human cloning, but I’m not sure those are relevant here.)

Long-range. A common thread in all these cases is that a traditional role shrinks (e.g., live performance musician, portrait painter) but the types of roles expand (e.g., recording artist, house music DJ, indie filmmaker). Creative tools become more available to nonexperts (e.g., home movies, consumer photography), which occasionally provides a starting point for kids growing up to be artists. Traditional roles remain, but become more rarefied events (e.g., attending live music concerts, buying hand-drawn art). More artists’ workflows will use these “AI” techniques extensively.

I find it hard to imagine that the overall range of artistic opportunities or forms of expression has been diminished by developing tools for making art, or that the new “AI” tools will be different in this regard.

5. Socioeconomic concerns pose as artistic judgements

New art technologies don’t exist in a vacuum—they inherit the sociopolitical issues around them. Inevitably, people conflate these external issues with the artistic, aesthetic ones (perhaps combined with a bit of status quo bias).

I argue that it’s better to keep them separate: if we want to understand how the art will evolve in the future, we shouldn’t present our socioeconomic concerns as artistic judgments. This may be difficult; I suspect it’s easier to accept a new artistic style when it doesn’t make you feel threatened. I don’t think “Old Town Road” was removed from the Country Music charts for purely definitional reasons.

Hip-hop came from dual turntables and faders, deployed in funk party culture in poor New York neighborhoods. When hip-hop blew up in the 1980s, the backlash said “hip-hop isn’t really music” or “it’s degenerate.” While this was in part about aesthetics (when “it’s not music” means “it doesn’t sound like the music I grew up with”), it was mostly racism. That is, people disguised their racism as artistic judgements.

Baudelaire’s argument against photography expressed his rejection of modernity in general; the older generations’ rejection of the rock-and-roll music of the 1950s and 1960s reflected a broader generational conflict around counterculture (and teenage rebellion).

“AI” art. “AI” art suffers from many ethical concerns, which I am deeply ambivalent about. I believe we can be very worried about harm to currently-working artists, while also being excited about the artistic potential of these tools, extending the 60-year history of computer art.

I argue that these ethical issues are not really about art: the ethical issues of “AI” art are instances of the ethical issues of “AI.” Specifically, when it is or isn’t “ok” to train models on people’s data is a huge societal question that’s much broader than where artistic training data comes from, even if this ends up being the most visible instance. Racial bias and representation in “AI” models is a much bigger problem than just in image generation. Finally, misleading claims that the “AI” software is itself an artist reflect broader dangers of “AI” hype. Perhaps the visibility of “AI” art will force a broader reckoning with some of the problems of “AI” ethics.

When people claim that the new art made with “AI” will be worse than the old art, I hear this as an expression of socioeconomic concerns, like the very valid employment concerns.

Conversely, I recently participated in an architectural panel discussion in which the architects seemed entirely unconcerned about “AI” appropriation—even when generating images in their own firms’ styles. For them, the image is not the final product, and so the technology could only enhance their processes.

In the words of Ted Chiang: “I tend to think that most fears about A.I. are best understood as fears about capitalism. And I think that this is actually true of most fears of technology, too.”

The debate over “AI” art ethics reminds me of the complex debate over cultural appropriation: a conflict of norms around art and different communities that doesn’t have easy answers or resolution, and in which the important issues get reduced to caricatures. Our previous norms and rules don’t seem quite appropriate to address the underlying problems. Still, I maintain it’s about the societal issues, not the quality of the art. I don’t think anyone publicly argues anymore that White rock-and-roll was objectively worse than the Black music from which it was originally derived; it’s about the way it was made and who benefitted.

Nettrice Gaskins writes about the distinction between appropriation and reappropriation and how the distinction gets very blurry with “AI.”

Here’s a description, by Barbara Nessim, of how artistic professionals feel threatened by computer technology—from 1983. (source)

6. We rethink what art is

Different eras have had radically different notions of what art “is”, and some shifts in the definition of art have been spurred by technological change.

The distinct concepts of “art” and “artist” didn’t really exist until the societal transformations spurred by the Industrial Revolution.

Photography automated things thought to be the sole domain of human creativity and skill. It led to a rethinking of what “art is” and the Modern Art movement.

Hip-hop grew, in part, from the technology of dual turntables with faders. Hip-hop relied heavily on remixing and repurposing existing music, collaging it into something unrecognizably different. This led to a greater appreciation of the role of remix in art, from Homer to Shakespeare and beyond, and the idea that “Everything is a remix”, which complicates simplistic notions of ownership and copyright.

Now, “AI” art promises something similar, as we again attempt to grapple with what it means when algorithms appear to be doing things that we thought were the sole domain of human creativity, and we need to revise our understanding of what an artist does—what wasn’t creativity but skill, what it means to make art with these tools, and what is the real essence of human creativity.

Is it different this time?

Extrapolating from all these trends risks missing the ways this time could be different. But, so far, many of the claims about how it’s different seem hyperbolic to me and ungrounded in the reality of how these algorithms actually work: for example, the claim that diffusion models work by copying the data, or the presentation of “the AI as artist” as a serious possibility.

The new algorithms seem different, automating things that we used to think that only artists could do. But this has been true before: photography also automated things that we previously thought only artists could do. The fact that now seems different attests to just how radically photography transformed our understanding of art. I think that, when the dust settles and the tools evolve, we’ll get better at discerning which kinds of outputs are of the sort that “any idiot can do that” versus which reflect real artistic skill and talent.

Moreover, the common trends and the underlying mechanisms line up well between the current “AI” art and the past examples. A machine automates some stages of the process that people thought were essential to what artists did. These algorithms are generative art, and people have been making generative art for decades. These algorithms automate more of the generative process than before.

There are a few key differences from previous iterations that change the discussion. First, the misleading notion of “artificial intelligence” distorts the discussion, and the mistaken impression that computer algorithms can be artists is stronger. Second, the sourcing of training data may change the societal response.

Regardless, art is social; it is about connection between humans, and computers can never replace human authorship. The questions are how authorship is expressed, and how ownership and control shift.

Thanks to Mick Storm, Cassidy Curtis, Rif A. Saurous, Serge Belongie, Juliet Fiss, and others who commented.