A Catalog of “AI” Art Analogies

Since deep neural networks are hard-to-interpret mathematical functions, discussion and debate over “AI” art are partly conducted through analogies. Analogies are useful: they help us identify how the new thing might end up being similar to old things. The ability to think with analogies may even be key to ingenuity. But they hide differences. Each analogy is useful in some ways and misleading in others.

The choice of analogy may reflect the speaker’s agenda. Those of us who compare “AI” art to photography emphasize its similarity to earlier artistic technologies that seemed to automate art but came to be widely accepted. But the photography analogy downplays the impact on real people’s careers. On the other hand, people who say it’s just direct copying argue that there’s no value whatsoever in “AI” art. At times, analogies serve as foot soldiers on a rhetorical battleground.

In this post, I catalogue a few of the analogies for “AI” art, how they are useful, and how they’re misleading. My previous blog post examined a few of these precedents in far more depth.

UPDATE (10/5/23): Since I started this page, more and more analogies have appeared. Two that seem particularly illuminating (but I haven’t studied closely) are:

Photography

This is the most common analogy for computer-generated and procedural art. For example, I used it in my thesis back in 2001, and I suspect many people come up with it independently.

How It’s Useful: Some of the most naive criticisms of “AI” art (“you just pushed a button!” “the machine did all the work!” “this will replace artists”) were things that people said about photography. If your criticism of “AI” art could equally have been made about photography, then maybe it’s a naive criticism.

The Subtext: “AI” art is another tool for art; we just don’t appreciate it yet. It will become an accepted, essential tool in our society, just as photography did.

How It’s Misleading: Dismisses short-term impacts. Disregards the role of training data, including the ethics of where the data came from and how biases in the data affect the outputs.

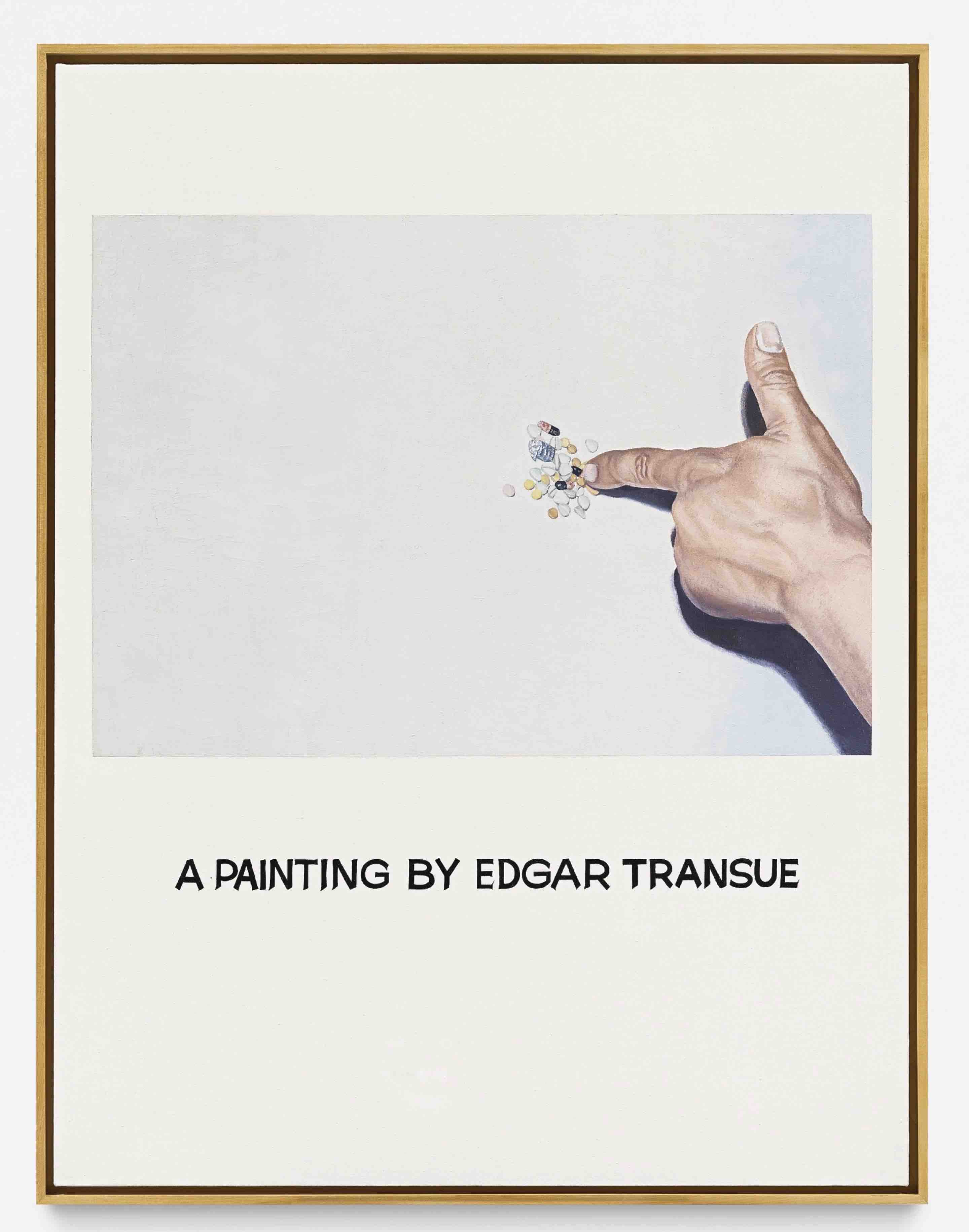

Conceptual Art

Largely as a result of conceptual artists such as Marcel Duchamp, Sol LeWitt, and John Baldessari, contemporary art emphasizes the artist’s driving idea over its execution.

How It’s Useful: Providing instructions to software is much like Sol LeWitt or John Baldessari providing instructions to a painter. The idea that technical skill and craft are essential to art is old-fashioned.

The Subtext: Anything you do can be art, therefore “AI” art is art.

How It’s Misleading: Most art in our world isn’t conceptual art, or even contemporary art. Aesthetics, skill, and effort are important for most kinds of art that we care about.

Theft

Critics of text-to-image generation say that it’s theft.

How It’s Useful: “AI” art could unjustly deprive people of income.

The Not-So-Subtext: “AI” art is unethical and immoral.

How It’s Misleading: “Theft” implies taking a physical object, like breaking into your home and stealing a painting, or transferring digital assets, like draining a bank account. Copying an image file is very different: if I copy your JPEG, you still have the JPEG. Copyright, unlike ownership of a physical object, is not absolute, and expanding copyright too far can harm both artists and society.

Appropriation

Appropriation refers to at least two distinct, complex concepts: appropriation art (a form of conceptual art), and cultural appropriation. I haven’t actually heard these analogies made much, but I think they’re useful.

How It’s Useful: Both appropriation art and cultural appropriation are complex, difficult topics. Cultural mixing and remixing are vital and beneficial to culture, but they can be done in harmful ways. Sometimes appropriation is “ok” and sometimes not (e.g., compare Sherrie Levine vs. Richard Prince vs. Roy Lichtenstein), and there are no simple rules about which is which, and no consensus either.

The Subtext: It’s complicated.

How It’s Misleading: The fact that it’s hard to draw a line about what’s “ok” or “not ok” is sometimes taken to mean that you shouldn’t try, and thus either nothing is “ok” or everything is “ok.” Unfortunately, the concept of cultural appropriation has been reduced to caricature, making it easier for some people to dismiss it.

How Humans Learn to Make Art

Advocates say that training models on data is just like how humans learn to make art and draw inspiration from other art.

How It’s Useful: We often underestimate the role of influence and inspiration in art, and the way that everything is a remix. All art draws on other art in some way, and people learn by imitation and copying. Homer, Shakespeare, and Van Gogh all worked within specific traditions and built on previous examples, specific to their times and places. The idea of the genius artist who invents everything from scratch is a myth.

The Subtext: Training a model on data is a completely valid thing to do.

How It’s Misleading: To say that an “AI” algorithm works “just like” a person is wrong and misleading. Computers are not people, and humans are not CPUs. We are not explainable by algorithms, and we have a higher moral status than machines. Even the term “machine learning” is misleading when it leads people to equate ML with human learning. To say that “it’s just like how humans learn” dismisses legitimate concerns about the ways these algorithms process data; besides, there are limits on when it’s ok for humans to copy.

Sampling and Collage

In music, sampling involves taking bits of existing recordings and reusing them in new ways, from musique concrète to hip-hop and beyond. In visual art, collage and photomontage involve cutting out images and photos and combining them in new ways.

How It’s Useful: Remix in hip-hop and electronic music revealed to a lot of people how reusing existing elements can be transformative and create new art forms.

The Subtext: “AI” art is transformative, therefore it is a valid and legitimate artform. Or: “AI” art is directly copying examples, therefore it’s invalid and illegal.

How It’s Misleading: The preliminary evidence that these models perform widespread copying is very weak, and there are effective mitigations for memorization, but more research is needed to really understand what the models are doing. Whether they copy bits and pieces of training images hasn’t been studied yet, and it may be hard to study rigorously, both because of the combinatorics involved and because you can’t just report every match as a copy (as one paper has done).

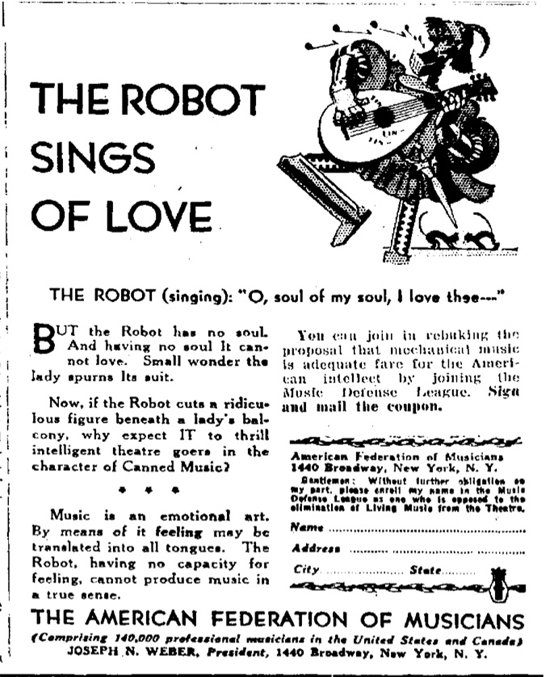

Music Recording

In the 1920s and 1930s, musicians’ unions fought against the introduction of recorded music, including with an advertising campaign against “robots” that seems familiar today and downright silly in retrospect. For example, the ad below asserted that recorded music isn’t really music, much as people today say that “AI” art isn’t really art.

How It’s Useful: It’s an earlier example of technological disruption to art and employment, one that changed the availability of jobs but created a tool that most of us now take for granted. Musicians’ unions were able to win some protections for their members despite the change.

The Subtext: Technological change happens, get over it.

How It’s Misleading: Music recording is, first and foremost, about distribution platforms, although it enabled new kinds of art as well (e.g., album-oriented rock). The role of distribution platforms (like streaming) is a related but somewhat different topic, and one that arguably has bigger implications for creators.

Lava Lamp

In the past week, two high-profile art critics (Ben Davis and Jerry Saltz) called an AI artwork a “lava lamp,” and a third used the term for AI art in general.

How It’s Useful: Something can be dazzling but otherwise hollow of meaning or significance.

The Not-So-Subtext: This stuff is just gimmicky eye-candy.

How It’s Misleading: Dismisses the value of experimentation and form, and the many great works already created with these techniques that are more than just visuals.

Parrots

Large language models (LLMs) are not sentient or intelligent; they are trained to produce high-probability sequences of text similar to a training corpus, like a parrot repeating things it has heard without understanding.

How It’s Useful: Shows that you can generate plausible text without understanding. Deflates wrong, misleading notions of AI sentience and intelligence.

The Not-So-Subtext: These things are convincing nonsense generators.

How It’s Misleading: The analogy doesn’t really resonate because parrots only repeat isolated words or phrases. A parrot can’t compose a coherent essay for you. LLMs are much richer models that do far more than repeat sentences.

A Blurry JPEG

Ted Chiang wrote an article entitled “ChatGPT Is a Blurry JPEG of the Web”.

How It’s Useful: Conveys the important concept that the model is lossily fitting and encoding a dataset.

The Subtext: It’s regurgitating the data.

How It’s Misleading: Like many of these analogies, it suggests these things are just memorizing or collaging the data, rather than forming new, plausible combinations of it.

AI as Theatre

I very much like the piece “Interface as Stage, AI as Theater,” but I haven’t yet had a chance to work out how it fits into this list.