How to Draw Pictures, Part 3: Style

In two previous blog posts, I described simple models for drawing. The drawings so far have been relatively plain, but we can extend the basic techniques to get a broad and expressive range of artistic rendering styles.

Stroke style

As already shown, we can use different styles of outline strokes, including both brush textures and changes to the shapes of the outlines. We can even animate them. Here’s an example of applying different kinds of strokes to animations of 3D models:

Skip to 4:15 in the video to see stylized 3D stroke animation

Shading and Hatching

Of course, we can also draw inside the shapes. The most popular and well-known non-photorealistic shading algorithm is toon shading, which is widely used in games and animation:

Other notable examples of 3D non-photorealistic rendering (NPR) in video games include Return of the Obra Dinn, Ōkami, Team Fortress 2, Borderlands 2, and Valorant.

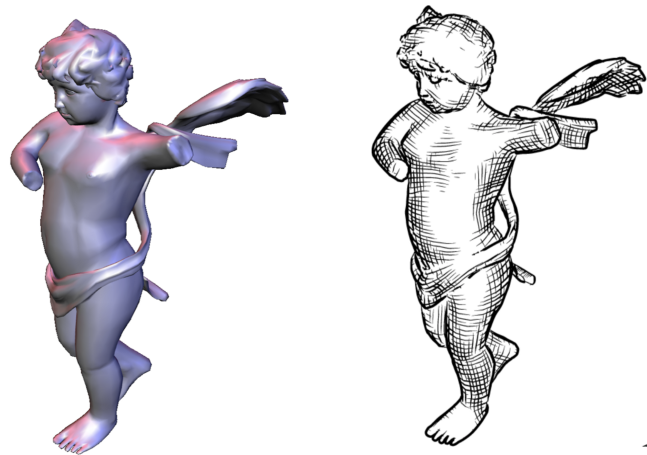
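Toon shading is commonly implemented by quantizing the diffuse (Lambertian) lighting term, max(0, N·L), into a small number of flat bands instead of letting it vary smoothly. The sketch below illustrates that idea for a single surface point; the function names and the three-band default are illustrative assumptions, not taken from any particular game or renderer.

```python
import math


def normalize(v):
    """Return the 3D vector v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def toon_shade(normal, light_dir, bands=3):
    """Quantize the Lambertian term max(0, dot(N, L)) into `bands` flat tones.

    Returns a tone in [0, 1]: 0.0 is the darkest band, 1.0 the brightest.
    """
    if bands < 2:
        raise ValueError("need at least two bands")
    n = normalize(normal)
    l = normalize(light_dir)
    diffuse = max(0.0, sum(a * b for a, b in zip(n, l)))
    # Snap the continuous diffuse value to one of `bands` discrete levels;
    # min() keeps diffuse == 1.0 in the top band instead of overflowing it.
    level = min(int(diffuse * bands), bands - 1)
    return level / (bands - 1)


# A surface tilting away from a light directly overhead passes through the bands.
for tilt in (0.0, 2.0, 4.0):
    print(tilt, toon_shade((tilt, 0.0, 1.0), (0.0, 0.0, 1.0)))
```

With three bands, the three tilts above land in the bright, middle, and dark bands respectively. Real toon shaders usually do this per pixel in a shader, often with a lookup texture so artists can paint the band colors and edges.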

But there are lots of other ways to draw objects. For example, hatching is widely used in pencil and pen illustration. Here are the results of an algorithm that we developed to draw hatching illustrations:

In order to hatch a surface, we need to determine the hatching directions as well as their density. In the above illustration, the density is based on shading: darker regions have cross-hatching. To determine the directions, we notice from real drawings that people often draw lines along the directions in which the surface curves the most and the least (formally, these are called the principal curvature directions). Perceptual psychologists have also found that, in isolation, people tend to perceive hatch lines as curvature directions.

We can also let a user author the rendering style. Here is an animation method that applies the Image Analogies approach to 3D animation:

Stylizing Animation By Example
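The hatching rule described earlier (density from shading, directions from principal curvature) can be sketched for a single surface point. This is a minimal sketch, assuming the surface's shape operator is already available as a symmetric 2x2 matrix [[a, b], [b, c]] in an orthonormal tangent basis; its eigenvectors are the principal directions. The function names, the tone thresholds, and the choice of which direction gets the first hatching layer are all hypothetical, not the algorithm from the illustration above.

```python
import math


def principal_directions(a, b, c):
    """Principal curvatures and directions of a symmetric 2x2 shape operator.

    The operator [[a, b], [b, c]] is expressed in an orthonormal basis of the
    tangent plane. Returns ((k_max, d_max), (k_min, d_min)), where d_max and
    d_min are perpendicular unit vectors in that basis. At umbilic points
    (a == c and b == 0) the directions are undefined; this returns an
    arbitrary pair.
    """
    mean = 0.5 * (a + c)
    radius = math.hypot(0.5 * (a - c), b)
    # Angle of the eigenvector with the larger eigenvalue.
    theta = 0.5 * math.atan2(2.0 * b, a - c)
    d_max = (math.cos(theta), math.sin(theta))
    d_min = (-math.sin(theta), math.cos(theta))
    return (mean + radius, d_max), (mean - radius, d_min)


def hatch_directions(tone, shape_operator, light=0.7, dark=0.35):
    """Choose hatch directions at a surface point from its tone.

    tone is a shading value in [0, 1], where 1 is fully lit. Bright points get
    no strokes, mid-tones get one layer of hatching, and dark points get
    cross-hatching along both principal directions. The thresholds and the
    use of d_min for the first layer are arbitrary illustrative choices.
    """
    (_, d_max), (_, d_min) = principal_directions(*shape_operator)
    if tone >= light:
        return []
    if tone >= dark:
        return [d_min]
    return [d_min, d_max]
```

For example, on a unit cylinder with tangent basis (around, along) and curvature taken as positive for a convex surface, the shape operator is [[1, 0], [0, 0]]: `principal_directions(1.0, 0.0, 0.0)` gives the direction around the cylinder as d_max and the direction along its axis as d_min. A full renderer would also need to smooth these directions across the surface, since they are noisy or undefined in flat and umbilic regions.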

There are lots of other ways to draw objects, such as watercolor or oil painting, in more or less “impressionistic” styles, and so on.

Most computer animation and games that build on these techniques are essentially creating new visual artistic styles, mashing up conventional line drawing and painting with computer graphics algorithms. An example is the lovely Paperman animation, which combines contour tracking and toon shading, driven by an enormous amount of authoring and creativity from professional animators:

Pictorial design

All of these rendering styles so far are still relatively “rigid”: they carefully draw each line exactly where linear perspective projection says it should be. However, artists do not normally draw in strict linear perspective, and sometimes they play with perspective to dramatic effect. Here is a 1914 painting by Giorgio de Chirico, which uses multiple perspectives to give a sense of dissonance and melancholy, and a 3D rendering inspired by it:

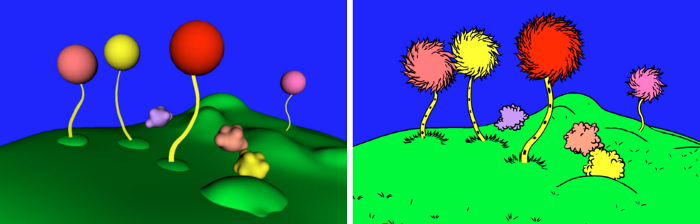

The 3D rendering on the right uses different camera projections for the three separate objects, violating conventional linear perspective. Many other elements of drawing and rendering depend on how they appear in the image, not just on the 3D scene. For example, this method for Dr. Seuss-style rendering controls the density of its drawn elements in image space and makes sure they always face the viewer:

Here is a video of that system in action (albeit at low resolution and with video transfer artifacts).
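Returning to the de Chirico-inspired rendering, one simple way to break single-point linear perspective is to give each object its own camera and project its geometry independently before compositing the results. This is a minimal sketch, assuming a bare pinhole camera that looks down the +z axis; the `PinholeCamera` class, the `project_per_object` function, and the example values are hypothetical, and this is not necessarily how that rendering was produced.

```python
from dataclasses import dataclass


@dataclass
class PinholeCamera:
    """A minimal pinhole camera at `position`, looking down the +z axis.

    focal is the focal length in image units; center is the image-plane
    position that the optical axis maps to.
    """
    position: tuple
    focal: float
    center: tuple = (0.0, 0.0)

    def project(self, point):
        # Express the point relative to the camera, then divide by depth.
        x, y, z = (p - q for p, q in zip(point, self.position))
        if z <= 0:
            raise ValueError("point is behind the camera")
        return (self.center[0] + self.focal * x / z,
                self.center[1] + self.focal * y / z)


def project_per_object(scene, cameras):
    """Project each object's points with that object's own camera.

    scene maps object name -> list of 3D points; cameras maps object name ->
    PinholeCamera. Returns object name -> list of 2D image points. Visibility
    and compositing order are not handled here.
    """
    return {name: [cameras[name].project(p) for p in points]
            for name, points in scene.items()}


# Hypothetical example: the same 3D point drawn through two different cameras,
# a short focal length for one object and a longer, offset one for the other.
scene = {"arcade": [(1.0, 1.0, 2.0)], "tower": [(1.0, 1.0, 2.0)]}
cameras = {
    "arcade": PinholeCamera(position=(0.0, 0.0, 0.0), focal=1.0),
    "tower": PinholeCamera(position=(0.0, 0.0, -2.0), focal=2.0, center=(10.0, 0.0)),
}
print(project_per_object(scene, cameras))
```

Because each object keeps an internally consistent perspective while the objects disagree with each other, the composite can read as subtly "wrong" in the way the painting does. Deciding which object occludes which is left to the compositing step, since there is no longer a single shared depth buffer.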

Defining Style and the Science of Art

The main motivation for this research has been to create new tools for artists. However, the algorithms I’ve described in these blog posts also provide a generative theory of representational art. The more styles they can create from a relatively small set of concepts, such as contours, stroke styles, and shading styles, the more they could potentially provide a scientific description of how we draw and paint. Furthermore, these building blocks of strokes, shading, and pictorial styles provide a useful way to categorize and understand artistic style.

Despite all these successes so far, many open research problems remain. How can we programmatically define the space of styles, and what significant new technologies does that still require? What user interfaces can we use to author styles? Can we combine these techniques with machine learning and style transfer?

For a longer discussion of how these models can help create a science of art, see my NPAR 2004 paper; for how they can help us classify and define style, see this 2005 paper by Willats and Durand.

These generative models are only half of the story. To really understand the science of art, we also need to study the visual perception of art. I’ll discuss this topic in a future series of blog posts.

In Part 4 of this series, I go deeper into the topic of line thickness.